Operations leaders across sectors are deploying new automation tools and techniques, in parallel with longer-term IT modernization plans, to quickly digitize the last mile of processes within a company. By combining these tools with advances in IT (including agile software development and microservices) and in organizational design (such as more robust learning platforms and agile methodologies that move beyond the traditional matrix structure), operations leaders can rapidly turn their ops into tech: tech that they own, manage, and continuously improve. The result can be cost reductions of as much as 60 percent, along with better quality and timeliness.


However, many operations teams and their senior leadership set aspirations that are too low and struggle to realize impact even in the first steps of this journey. Lacking a clear target and strong mandate to change their operating model, many companies remain trapped in pilot purgatory: investment is tentative, there is little impact at scale, teams abandon efforts after a few isolated projects, and they focus solely on point solutions, rather than building a true engine for execution and continuous improvement.

Using these tools in a haphazard, non-orchestrated way can lead not just to inefficiency, but to operational chaos: unstable robots that block systems and processes, a rat’s nest of complicated processes that are never challenged or properly redesigned, and managers left unable to manage a virtual workforce. But handled in the right way and in the right sequence, these tools are the first step toward accelerating continuous, tech-enabled redesign in many operational areas, strengthening operational resilience while increasing productivity.

To break through these common failures, businesses must:

- Develop an intuitive ops-to-tech cycle that helps operations teams understand what a continuous improvement engine looks like within the context of automation and the next-generation operating model;

- Identify the biggest challenges that managers typically face when seeking to launch or accelerate the ops-to-tech cycle; and

- Create an effective short-term playbook to help managers overcome these challenges, ensure sustainable results in the short and longer terms, create new opportunities for employees, and change the vision for continuous improvement within the organization.

While companies have a long history of combining tech and ops iteratively, most institutions still struggle with both complex IT and complicated, high-cost, and often error-prone operations.

Lean and other operational-excellence approaches help operations enormously by focusing on the customer, eliminating waste, enhancing transparency, and creating a culture that drives continuous improvement. But such efforts in isolation can be highly constrained by IT systems that can be slow to arrive, costly, and complex to manage. In the meantime, operations must cope with processes that remain highly manual and fragmented.

What the future looks like

Recent changes in technology have suddenly created a new set of possibilities. Notable among them are process-mining and natural-language-processing tools, robotics (both robotic process automation, or RPA, and robotic desktop automation, or RDA), accessible machine learning and artificial intelligence, improved data-management tools, and microservices defined by APIs (application programming interfaces). With them, companies can modernize complicated systems, automate manual steps, and bring data to bear more quickly, generating the analysis and insights needed to identify and resolve the root causes of inefficiencies and other problems.


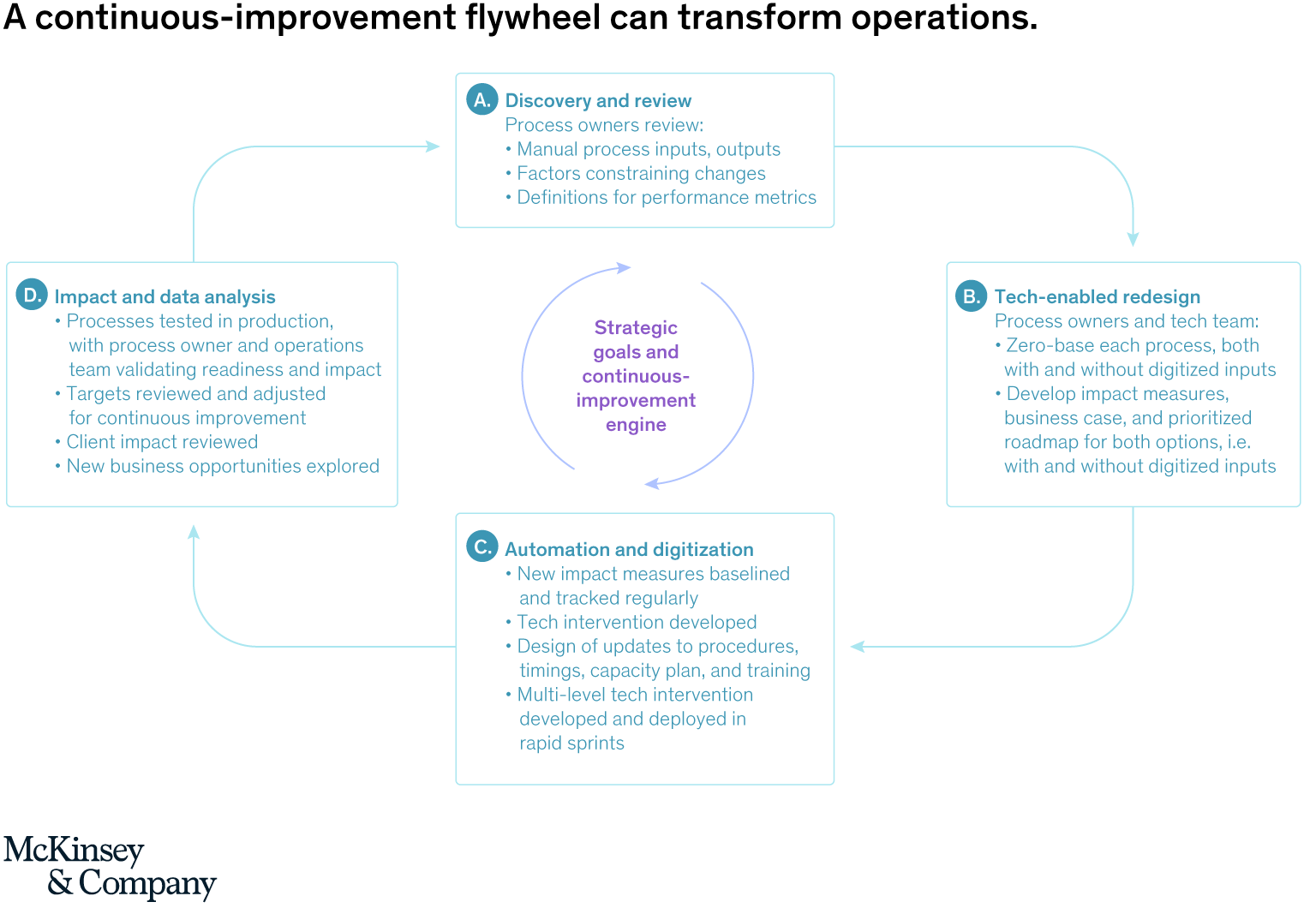

We call this continuous-improvement cycle “turning operations into tech.” It’s a shorthand for codifying all the manual steps and rules that exist in many operations shops, as well as the virtual playbook that exists only in the heads of critical operations personnel. The result is a set of codified instructions that automated bots can easily and quickly test, update, and execute. The automated tools can also create audit trails and generate new data: both bottom-up, for specific work being processed, and top-down, on how the processes are running. This further enables new analysis, insights, and impact.

Companies can use machine-vision, process-mining, and natural-language-processing tools to analyze additional data sources, such as desktop actions, log files, and call transcripts. These tools can give operators real-time coaching and a treasure trove of detail for further ad hoc optimization and root-cause-elimination analysis. This approach starts a powerful flywheel of continuous improvement that operations teams can drive (exhibit).

The combination of these tools and capabilities, used in the right ways, is revolutionary. We know of several large organizations across industries that are on track to achieve efficiencies of 50 percent or more over the next three to five years. For example, one financial institution has used the flywheel to reduce costs by nearly 45 percent across operations in two years, and is on track for further savings and new business opportunities. Another financial institution was able to redesign and automate its global operations to reduce cost by 50 percent while eliminating extensive duplicate work and downstream errors. In both cases, the institutions will use the savings in part to fund a shift from low-value manual work to high-value, data-driven client service.

In a third example, a bank automated 60 percent of customer payment-request routing. Customers must physically sign these payment requests, so straight-through processing requires several automation capabilities. The bank used the flywheel concept to iteratively increase the scope and effectiveness of automated document processing. Workflow proved to be a critical enabler: consolidating and tracking volumes, logging successes and exceptions, and providing valuable data for capacity management and service-level agreement adherence as manual effort declined over time.
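The workflow data in this example can be summarized with a few simple ratios. The sketch below is a minimal, assumed version: the `WorkItem` fields, the four-hour service-level target, and the toy log are hypothetical and are not the bank's actual data or metrics. It computes volume, the share of requests routed without a manual touch, the exception count, and service-level adherence.

```python
from dataclasses import dataclass


@dataclass
class WorkItem:
    # Hypothetical workflow record for one payment request
    request_id: str
    routed_automatically: bool  # True if no manual touch was needed
    hours_to_route: float       # elapsed time from receipt to routing


SLA_HOURS = 4.0  # assumed service-level target, for illustration only


def workflow_metrics(items: list[WorkItem], sla_hours: float = SLA_HOURS) -> dict[str, float]:
    # Summarize a batch of workflow records for capacity and SLA reporting
    total = len(items)
    if total == 0:
        return {"volume": 0, "automation_rate": 0.0, "exception_count": 0, "sla_adherence": 0.0}
    automated = sum(1 for i in items if i.routed_automatically)
    within_sla = sum(1 for i in items if i.hours_to_route <= sla_hours)
    return {
        "volume": total,
        "automation_rate": automated / total,
        "exception_count": total - automated,  # items that needed manual handling
        "sla_adherence": within_sla / total,
    }


# Toy log (hypothetical values)
log = [
    WorkItem("r1", True, 0.5),
    WorkItem("r2", True, 1.0),
    WorkItem("r3", False, 6.0),
    WorkItem("r4", True, 0.2),
    WorkItem("r5", False, 3.5),
]
print(workflow_metrics(log))
# {'volume': 5, 'automation_rate': 0.6, 'exception_count': 2, 'sla_adherence': 0.8}
```

Computing these figures period by period is one simple way to watch manual effort decline as the scope of automated processing grows.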

This cycle has become possible only in the last few years, as automation and machine learning have improved dramatically. Development speed has increased substantially, and the breadth of processes that can be automated has exploded: not just simple data-entry manipulation but also more knowledge-based processes, such as generating insights from data. One global bank has automated substantial parts of the equity analyst’s role in reading financial reports; comparing same-store sales, drawing conclusions, and generating commentary are among the many tasks that can now be largely automated.

False starts?

In many operations shops, process improvement can stall because the processes are not well defined; if they are well defined, there might not be enough data to identify a problem’s root causes or pinpoint improvement opportunities. Or a long-expected future platform could bring significant changes to an area or process, so teams wait and see rather than improve today.

Given competing priorities, companies may fail to invest in foundational process mapping and microeconomics. Or they don’t see a strong business case for automating the last mile of company processes, perhaps because the processes in question are infrequent or involve comparatively few people. Even when they do build a reasonably complete automation solution, it may be too rigid to allow for updates as business conditions change.

These managers are overlooking the full cost of incomplete automation. For example, they don’t account for the lengthy internal back-and-forth discussions on how to handle a particular exception. These can involve senior people “rediscovering” how to approach the task, trial and error in working through legacy systems, projects bouncing between teams who are unaccustomed to handling lower-volume activities, the risk and cost of fixing errors, and the investment in maintaining incomplete, outdated, and potentially unused training, compliance, and reporting materials. One insurance client estimated that these sorts of challenges inflated actual operations costs to 30 times the initial estimate.


Getting the most from the technology flywheel

Automation leaders take a different view. Self-documenting process-automation tools provide a solution that’s often less risky and less expensive than relying on a key person’s memory or falling back on manual documentation. At the same time, today’s automation tools provide a fast-enough return on investment to let companies put the right process mapping and instrumentation in place.

As the process runs, it generates data on process breaks and areas of slower performance, kickstarting the ops-as-tech flywheel. Accordingly, the savings aren’t just from eliminating manual work, but also from managing teams with a better view of day-to-day performance challenges and variability. With sufficient flexibility built in, a company can use this information to improve the existing process or de-risk future IT changes. The rule updates required to change the process are often very simple and instantly scalable across related processes. Workers don’t need retraining. Freed from repetitive tasks, experts can focus on making more process improvements and automating lower-volume exceptions.

The global bank mentioned previously has done this very well. Understanding how its customers and partners truly used its services and data proved crucial to unlocking new efficiencies and joint business-model innovation. After the bank automated its client trade and template processing, executives had a robust sense of the types and frequency of exceptions and errors by client, trade type, and product. They used this data to engage customers on how to reduce interaction costs, improve templates, and even gain paid integration work to introduce more straight-through processing. Such “beyond end-to-end” process redesign is increasingly common as companies look upstream and downstream from their customer interactions and internal datasets.

Getting to great

While this next-generation ops-to-tech cycle is simple enough to discuss widely within operations teams, there are several common challenges that we consistently observe in each step.

In discovery and review, a necessary part of identifying opportunities and engaging IT is to achieve the right level of granularity, so that the process owner can truly oversee processes and understand their performance. It doesn’t always go well. When granularity is too high, businesses miss new opportunities to automate, because there are too many manual exceptions. When it’s too low, the complexity (and resulting business case) can look daunting.

Discovery should start at a high level by challenging the need for exceptions. Identify opportunities to simplify, reorganize, and standardize processes—while minimizing exceptions—before investigating the next level of detail. Eliminating variants with low added value is an important opportunity for continuous improvement. Execute this work in rapid sprints, rather than trying to map all cases and exceptions exhaustively on the first iteration.
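One common way to challenge exceptions at a high level is variant analysis, a basic process-mining technique: rebuild each case's sequence of steps from an event log, count how often each distinct path occurs, and flag the rare paths as candidates to simplify, standardize, or eliminate. The sketch below assumes a hypothetical, already time-sorted log of (case ID, activity) pairs and an illustrative coverage threshold; real process-mining tools do considerably more, such as handling timestamps and concurrency.

```python
from collections import Counter

# Hypothetical event log: (case_id, activity) pairs, already in time order within each case
event_log = [
    ("c1", "receive"), ("c1", "validate"), ("c1", "approve"),
    ("c2", "receive"), ("c2", "validate"), ("c2", "approve"),
    ("c3", "receive"), ("c3", "validate"), ("c3", "escalate"), ("c3", "approve"),
    ("c4", "receive"), ("c4", "validate"), ("c4", "approve"),
    ("c5", "receive"), ("c5", "rekey"), ("c5", "validate"), ("c5", "approve"),
]


def variant_frequencies(log: list[tuple[str, str]]) -> Counter:
    # Rebuild each case's path, then count identical paths ("variants")
    traces: dict[str, list[str]] = {}
    for case_id, activity in log:
        traces.setdefault(case_id, []).append(activity)
    return Counter(tuple(path) for path in traces.values())


def rank_variants(counts: Counter, coverage: float = 0.8) -> list[tuple[str, int, float, bool]]:
    # Walk variants from most to least common; a variant is "core" if the
    # target share of cases had not yet been covered before it was added
    total = sum(counts.values())
    cumulative = 0
    ranked = []
    for variant, n in counts.most_common():
        in_core = cumulative / total < coverage
        cumulative += n
        ranked.append((" > ".join(variant), n, cumulative / total, in_core))
    return ranked


ranked = rank_variants(variant_frequencies(event_log), coverage=0.6)
for path, n, cum_share, core in ranked:
    label = "standard" if core else "challenge"
    print(f"{n} case(s), cumulative {cum_share:.0%}: {path} [{label}]")
# 3 case(s), cumulative 60%: receive > validate > approve [standard]
# 1 case(s), cumulative 80%: receive > validate > escalate > approve [challenge]
# 1 case(s), cumulative 100%: receive > rekey > validate > approve [challenge]
```

Each sprint could rerun a ranking like this on fresh data, drilling into the next level of detail only for the variants that survive the challenge.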

In tech-enabled redesign, zero-basing a process while keeping the latest automation technologies in mind helps keep ambitions high. A few basic questions structure the task: What’s stopping us from digitizing or automating the processes end to end? Could rules-based activity reduce current exceptions and manual processing? Is any complex thinking required? Can any of the new automation technologies help with this? Is a new digital front end or set of structured inputs required? Are there policy or regulatory hurdles to straight-through processing? Understanding each potential barrier, together with a realistic view of feasibility and required effort, helps prioritize actions. Even when digitization will take a few years, there are often short-term automation opportunities that will reduce the digitization effort’s cumulative risk and implementation cost, and they may even prove to be self-funding.

In automation and digitization, exciting technology developments are accelerating the path to impact and generating in-process data that companies can use for continuous improvement. For example, new automation tools and resources (some originating as community-driven efforts, such as forums among automation heads) let companies rapidly solve common challenges based on common configuration standards, best practices, and open-source platforming. Adapters are also available off the shelf, or can be developed in a reusable way to integrate with many common enterprise-software systems. An insurer, for example, was able to reduce the launch time for its automation program by an estimated five months, in part by leveraging best practices it learned through a forum, together with structuring its teams correctly and setting up its IT architecture and naming conventions properly from the get-go.

In impact and data analysis, operations managers must feel ownership and urgency to successfully drive ops as tech. Advanced-automation tools (sometimes in concert with process-mining and natural-language-processing tools) can help managers analyze more data than traditional sampling exercises allow—pointing to potential opportunities, suggesting priorities, and offering continuous monitoring. Performance targets should be adjusted accordingly, cascaded through all levels of the organization, and owned by the operations team.

Once this redesign flywheel starts, organizations can dream much bigger. Dramatic reductions in cost and increases in reliability and customer-facing capability are only the beginning of what they can achieve.